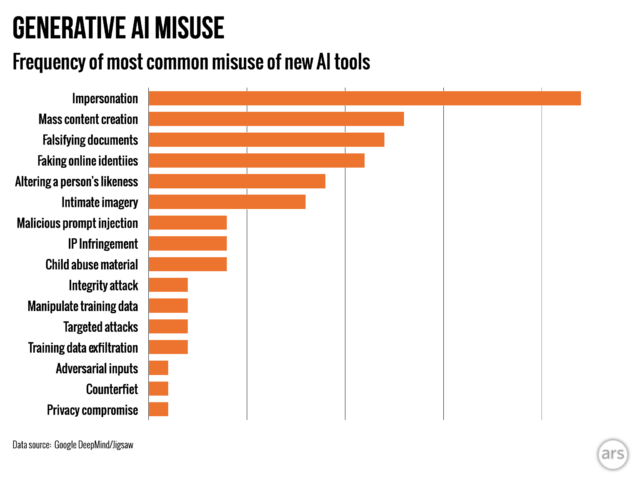

Artificial intelligence-generated “deepfakes” that impersonate politicians and celebrities are far more prevalent than efforts to use AI to assist cyber attacks, according to the first research by Google’s DeepMind division into the most common malicious uses of the cutting-edge technology.

The study said the creation of realistic but fake images, video, and audio of people was almost twice as common as the next highest misuse of generative AI tools: the falsifying of information using text-based tools, such as chatbots, to generate misinformation to post online.

The most common goal of actors misusing generative AI was to shape or influence public opinion, the analysis, conducted with the search group’s research and development unit Jigsaw, found. That accounted for 27 percent of uses, feeding into fears over how deepfakes might influence elections globally this year.

Deepfakes of UK Prime Minister Rishi Sunak, as well as other global leaders, have appeared on TikTok, X, and Instagram in recent months. UK voters go to the polls next week in a general election.

Concern is widespread that, despite social media platforms’ efforts to label or remove such content, audiences may not recognize these as fake, and dissemination of the content could sway voters.

Ardi Janjeva, research associate at The Alan Turing Institute, called “particularly pertinent” the paper’s finding that the contamination of publicly accessible information with AI-generated content could “distort our collective understanding of sociopolitical reality.”

Janjeva added: “Even if we are uncertain about the impact that deepfakes have on voting behavior, this distortion may be harder to spot in the immediate term and poses long-term risks to our democracies.”

The study is the first of its kind by DeepMind, Google’s AI unit led by Sir Demis Hassabis, and is an attempt to quantify the risks from the use of generative AI tools, which the world’s biggest technology companies have rushed out to the public in search of huge profits.

As generative products such as OpenAI’s ChatGPT and Google’s Gemini become more widely used, AI companies are beginning to monitor the flood of misinformation and other potentially harmful or unethical content created by their tools.

In May, OpenAI released research revealing operations linked to Russia, China, Iran, and Israel had been using its tools to create and spread disinformation.

“There had been a lot of understandable concern around quite sophisticated cyber attacks facilitated by these tools,” said Nahema Marchal, lead author of the study and researcher at Google DeepMind. “Whereas what we saw were fairly common misuses of GenAI [such as deepfakes that] might go under the radar a little bit more.”

Google DeepMind and Jigsaw’s researchers analyzed around 200 observed incidents of misuse between January 2023 and March 2024, taken from social media platforms X and Reddit, as well as online blogs and media reports of misuse.

Ars Technica

The second most common motivation behind misuse was to make money, whether offering services to create deepfakes, including generating naked depictions of real people, or using generative AI to create swaths of content, such as fake news articles.

The research found that most incidents use easily accessible tools, “requiring minimal technical expertise,” meaning more bad actors can misuse generative AI.

Google DeepMind’s research will influence how it improves its evaluations to test models for safety, and it hopes it will also affect how its competitors and other stakeholders view how “harms are manifesting.”

© 2024 The Financial Times Ltd. All rights reserved. Not to be redistributed, copied, or modified in any way.