Generative AI applications have little, or sometimes negative, value without accuracy, and accuracy is rooted in data.

To help developers efficiently fetch the best proprietary data to generate knowledgeable responses for their AI applications, NVIDIA today announced four new NVIDIA NeMo Retriever NIM inference microservices.

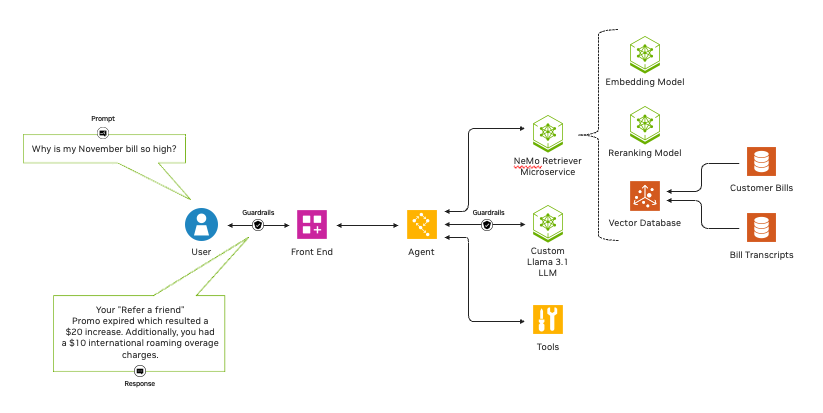

Combined with NVIDIA NIM inference microservices for the Llama 3.1 model collection, also announced today, NeMo Retriever NIM microservices enable enterprises to scale to agentic AI workflows, in which AI applications operate accurately with minimal intervention or supervision, while delivering the highest-accuracy retrieval-augmented generation, or RAG.

NeMo Retriever allows organizations to seamlessly connect custom models to diverse business data and deliver highly accurate responses for AI applications using RAG. In essence, the production-ready microservices enable highly accurate information retrieval for building highly accurate AI applications.

For example, NeMo Retriever can boost model accuracy and throughput for developers creating AI agents and customer service chatbots, analyzing security vulnerabilities or extracting insights from complex supply chain information.

NIM inference microservices enable high-performance, easy-to-use, enterprise-grade inferencing. And with NeMo Retriever NIM microservices, developers can benefit from all of this, superpowered by their data.

These new NeMo Retriever embedding and reranking NIM microservices are now generally available:

- NV-EmbedQA-E5-v5, a popular community base embedding model optimized for text question-answering retrieval

- NV-EmbedQA-Mistral7B-v2, a popular multilingual community base model fine-tuned for text embedding for high-accuracy question answering

- Snowflake-Arctic-Embed-L, an optimized community model, and

- NV-RerankQA-Mistral4B-v3, a popular community base model fine-tuned for text reranking for high-accuracy question answering.

They join the collection of NIM microservices easily accessible through the NVIDIA API catalog.

Embedding and Reranking Models

NeMo Retriever NIM microservices comprise two model types, embedding and reranking, with open and commercial offerings that ensure transparency and reliability.

An embedding model transforms diverse data (such as text, images, charts and video) into numerical vectors, stored in a vector database, while capturing their meaning and nuance. Embedding models are fast and computationally less expensive than traditional large language models, or LLMs.

A reranking model ingests data and a query, then scores the data according to its relevance to the query. Such models offer significant accuracy improvements, but they are more computationally complex and slower than embedding models.

NeMo Retriever provides the best of both worlds. By casting a wide net of data to be retrieved with an embedding NIM, then using a reranking NIM to trim the results for relevancy, developers tapping NeMo Retriever can build a pipeline that ensures the most helpful, accurate results for their enterprise.
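The two-stage pattern described here, a broad and cheap embedding search followed by a narrower and more careful rerank, can be sketched in a few lines of Python. This is a minimal illustration only, not the NeMo Retriever API: `embed`, `cosine`, `rerank_score` and `retrieve_then_rerank` are hypothetical names, the "embedding" is a toy bag-of-words vector, and the "reranker" is a simple term-coverage score standing in for a real model.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rerank_score(query, doc):
    """Toy stand-in for a reranking model: share of query terms found in doc."""
    q_terms = set(query.lower().split())
    d_terms = set(doc.lower().split())
    return len(q_terms & d_terms) / len(q_terms) if q_terms else 0.0


def retrieve_then_rerank(query, docs, recall_k=3, final_k=1):
    """Stage 1: wide net via vector similarity. Stage 2: trim via reranking."""
    q_vec = embed(query)
    # Stage 1: keep the recall_k documents closest to the query vector.
    candidates = sorted(docs, key=lambda d: cosine(q_vec, embed(d)), reverse=True)[:recall_k]
    # Stage 2: re-score only those candidates and keep the best final_k.
    return sorted(candidates, key=lambda d: rerank_score(query, d), reverse=True)[:final_k]


# Hypothetical example data, not taken from the article.
docs = [
    "reset your password from the account settings page",
    "password password password policy",
    "shipping times for international orders",
    "how to reset a forgotten password by email",
]
top = retrieve_then_rerank("reset forgotten password", docs, recall_k=3, final_k=2)
print(top)
```

In a real deployment the first stage would query a vector database populated by an embedding model, and the second stage would call a reranking model only on the short candidate list, which is what keeps the slower model affordable.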

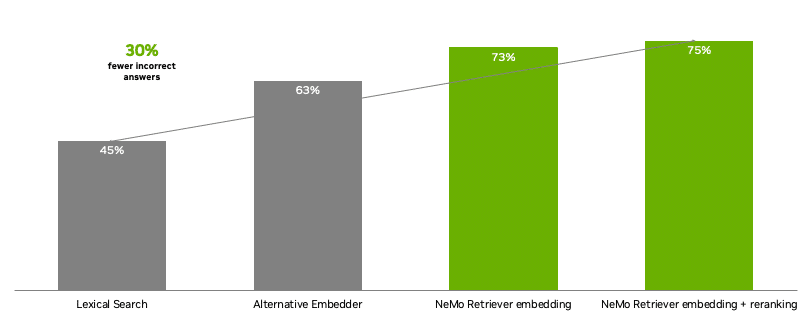

With NeMo Retriever, developers get access to state-of-the-art open, commercial models for building text Q&A retrieval pipelines that provide the highest accuracy. When compared with alternate models, NeMo Retriever NIM microservices provided 30% fewer inaccurate answers for enterprise question answering.

Top Use Cases

From RAG and AI agent solutions to data-driven analytics and more, NeMo Retriever powers a wide range of AI applications.

The microservices can be used to build intelligent chatbots that provide accurate, context-aware responses. They can help analyze vast amounts of data to identify security vulnerabilities. They can assist in extracting insights from complex supply chain information. And they can boost AI-enabled retail shopping advisors that offer natural, personalized shopping experiences, among other tasks.

NVIDIA AI workflows for these use cases provide an easy, supported starting point for developing generative AI-powered technologies.

Dozens of NVIDIA data platform partners are working with NeMo Retriever NIM microservices to boost their AI models' accuracy and throughput.

DataStax has integrated NeMo Retriever embedding NIM microservices in its Astra DB and Hyper-Converged platforms, enabling the company to bring accurate, generative AI-enhanced RAG capabilities to customers with faster time to market.

Cohesity will integrate NVIDIA NeMo Retriever microservices with its AI product, Cohesity Gaia, to help customers put their data to work to power insightful, transformative generative AI applications through RAG.

Kinetica will use NVIDIA NeMo Retriever to develop LLM agents that can interact with complex networks in natural language to respond more quickly to outages or breaches, turning insights into immediate action.

NetApp is collaborating with NVIDIA to connect NeMo Retriever microservices to exabytes of data on its intelligent data infrastructure. Every NetApp ONTAP customer will be able to seamlessly "talk to their data" to access proprietary business insights without having to compromise the security or privacy of their data.

NVIDIA global system integrator partners including Accenture, Deloitte, Infosys, LTTS, Tata Consultancy Services, Tech Mahindra and Wipro, as well as service delivery partners Data Monsters, EXLService (Ireland) Limited, Latentview, Quantiphi, Slalom, SoftServe and Tredence, are developing services to help enterprises add NeMo Retriever NIM microservices into their AI pipelines.

Use With Other NIM Microservices

NeMo Retriever NIM microservices can be used with NVIDIA Riva NIM microservices, which supercharge speech AI applications across industries, enhancing customer service and enlivening digital humans.

New models that will soon be available as Riva NIM microservices include: FastPitch and HiFi-GAN for text-to-speech applications; Megatron for multilingual neural machine translation; and the record-breaking NVIDIA Parakeet family of models for automatic speech recognition.

NVIDIA NIM microservices can be used all together or separately, offering developers a modular approach to building AI applications. In addition, the microservices can be integrated with community models, NVIDIA models or users' custom models (in the cloud, on premises or in hybrid environments), providing developers with further flexibility.

NVIDIA NIM microservices are available at ai.nvidia.com. Enterprises can deploy AI applications in production with NIM through the NVIDIA AI Enterprise software platform.

NIM microservices can run on customers' preferred accelerated infrastructure, including cloud instances from Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure, as well as NVIDIA-Certified Systems from global server manufacturing partners including Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro.

NVIDIA Developer Program members will soon be able to access NIM for free for research, development and testing on their preferred infrastructure.

Learn more about the latest in generative AI and accelerated computing by joining NVIDIA at SIGGRAPH, the premier computer graphics conference, running July 28-Aug. 1 in Denver.

See notice regarding software product information.