Editor’s word: This put up is a part of the AI Decoded collection, which demystifies AI by making the know-how extra accessible, and showcases new {hardware}, software program, instruments and accelerations for RTX PC customers.

Massive language fashions are driving among the most fun developments in AI with their capability to shortly perceive, summarize and generate text-based content material.

These capabilities energy quite a lot of use instances, together with productiveness instruments, digital assistants, non-playable characters in video video games and extra. However they’re not a one-size-fits-all answer, and builders typically should fine-tune LLMs to suit the wants of their purposes.

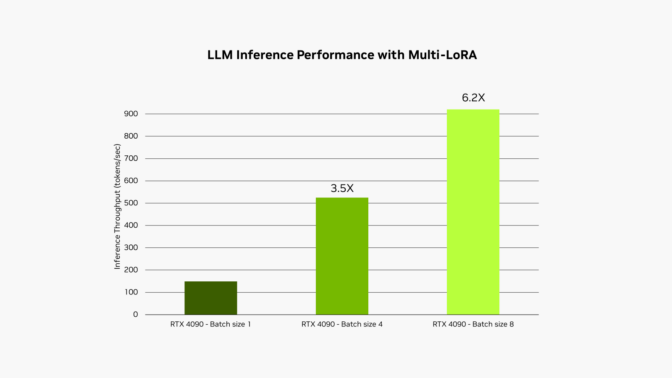

The NVIDIA RTX AI Toolkit makes it simple to fine-tune and deploy AI fashions on RTX AI PCs and workstations by means of a method referred to as low-rank adaptation, or LoRA. A brand new replace, obtainable at the moment, allows help for utilizing a number of LoRA adapters concurrently throughout the NVIDIA TensorRT-LLM AI acceleration library, enhancing the efficiency of fine-tuned fashions by as much as 6x.

High-quality-Tuned for Efficiency

LLMs should be rigorously custom-made to attain increased efficiency and meet rising consumer calls for.

These foundational fashions are skilled on big quantities of information however typically lack the context wanted for a developer’s particular use case. For instance, a generic LLM can generate online game dialogue, however it should possible miss the nuance and subtlety wanted to put in writing within the fashion of a woodland elf with a darkish previous and a barely hid disdain for authority.

To realize extra tailor-made outputs, builders can fine-tune the mannequin with data associated to the app’s use case.

Take the instance of growing an app to generate in-game dialogue utilizing an LLM. The method of fine-tuning begins with utilizing the weights of a pretrained mannequin, similar to data on what a personality could say within the recreation. To get the dialogue in the appropriate fashion, a developer can tune the mannequin on a smaller dataset of examples, similar to dialogue written in a extra spooky or villainous tone.

In some instances, builders could need to run all of those totally different fine-tuning processes concurrently. For instance, they could need to generate advertising copy written in several voices for varied content material channels. On the similar time, they could need to summarize a doc and make stylistic solutions — in addition to draft a online game scene description and imagery immediate for a text-to-image generator.

It’s not sensible to run a number of fashions concurrently, as they received’t all slot in GPU reminiscence on the similar time. Even when they did, their inference time can be impacted by reminiscence bandwidth — how briskly knowledge will be learn from reminiscence into GPUs.

Lo(RA) and Behold

A preferred approach to deal with these points is to make use of fine-tuning strategies similar to low-rank adaptation. A easy mind-set of it’s as a patch file containing the customizations from the fine-tuning course of.

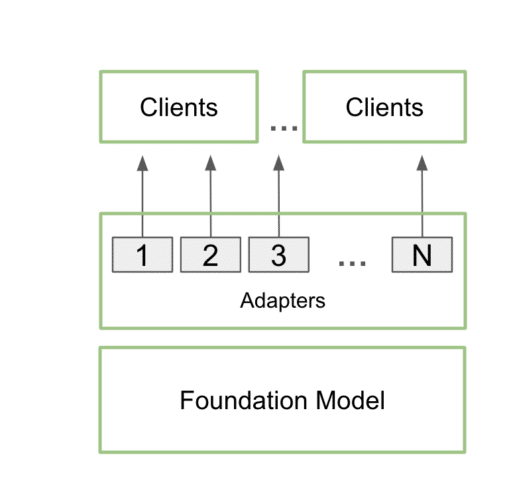

As soon as skilled, custom-made LoRA adapters can combine seamlessly with the inspiration mannequin throughout inference, including minimal overhead. Builders can connect the adapters to a single mannequin to serve a number of use instances. This retains the reminiscence footprint low whereas nonetheless offering the extra particulars wanted for every particular use case.

In apply, which means that an app can preserve only one copy of the bottom mannequin in reminiscence, alongside many customizations utilizing a number of LoRA adapters.

This course of known as multi-LoRA serving. When a number of calls are made to the mannequin, the GPU can course of the entire calls in parallel, maximizing the usage of its Tensor Cores and minimizing the calls for of reminiscence and bandwidth so builders can effectively use AI fashions of their workflows. High-quality-tuned fashions utilizing multi-LoRA adapters carry out as much as 6x sooner.

Within the instance of the in-game dialogue software described earlier, the app’s scope may very well be expanded, utilizing multi-LoRA serving, to generate each story components and illustrations — pushed by a single immediate.

The consumer may enter a fundamental story concept, and the LLM would flesh out the idea, increasing on the thought to offer an in depth basis. The applying may then use the identical mannequin, enhanced with two distinct LoRA adapters, to refine the story and generate corresponding imagery. One LoRA adapter generates a Steady Diffusion immediate to create visuals utilizing a domestically deployed Steady Diffusion XL mannequin. In the meantime, the opposite LoRA adapter, fine-tuned for story writing, may craft a well-structured and interesting narrative.

On this case, the identical mannequin is used for each inference passes, guaranteeing that the house required for the method doesn’t considerably enhance. The second cross, which includes each textual content and picture era, is carried out utilizing batched inference, making the method exceptionally quick and environment friendly on NVIDIA GPUs. This enables customers to quickly iterate by means of totally different variations of their tales, refining the narrative and the illustrations with ease.

This course of is printed in additional element in a latest technical weblog.

LLMs have gotten probably the most essential elements of contemporary AI. As adoption and integration grows, demand for highly effective, quick LLMs with application-specific customizations will solely enhance. The multi-LoRA help added at the moment to the RTX AI Toolkit offers builders a robust new approach to speed up these capabilities.