Information abstract: New libraries in accelerated computing ship order-of-magnitude speedups and cut back power consumption and prices in knowledge processing, generative AI, recommender methods, AI knowledge curation, knowledge processing, 6G analysis, AI-physics and extra. They embody:

- LLM purposes: NeMo Curator, to create customized datasets, provides picture curation and Nemotron-4 340B for high-quality artificial knowledge era

- Information processing: cuVS for vector search to construct indexes in minutes as an alternative of days and a brand new Polars GPU Engine in open beta

- Bodily AI: For physics simulation, Warp accelerates computations with a brand new TIle API. For wi-fi community simulation, Aerial provides extra map codecs for ray tracing and simulation. And for link-level wi-fi simulation, Sionna provides a brand new toolchain for real-time inference

Corporations all over the world are more and more turning to NVIDIA accelerated computing to hurry up purposes they first ran on CPUs solely. This has enabled them to realize excessive speedups and profit from unbelievable power financial savings.

In Houston, CPFD makes computational fluid dynamics simulation software program for industrial purposes, like its Barracuda Digital Reactor software program that helps design next-generation recycling services. Plastic recycling services run CPFD software program in cloud situations powered by NVIDIA accelerated computing. With a CUDA GPU-accelerated digital machine, they’ll effectively scale and run simulations 400x quicker and 140x extra power effectively than utilizing a CPU-based workstation.

A preferred video conferencing software captions a number of hundred thousand digital conferences an hour. When utilizing CPUs to create reside captions, the app might question a transformer-powered speech recognition AI mannequin 3 times a second. After migrating to GPUs within the cloud, the applying’s throughput elevated to 200 queries per second — a 66x speedup and 25x energy-efficiency enchancment.

In houses throughout the globe, an e-commerce web site connects a whole lot of hundreds of thousands of buyers a day to the merchandise they want utilizing a complicated suggestion system powered by a deep studying mannequin, operating on its NVIDIA accelerated cloud computing system. After switching from CPUs to GPUs within the cloud, it achieved considerably decrease latency with a 33x speedup and almost 12x energy-efficiency enchancment.

With the exponential progress of information, accelerated computing within the cloud is ready to allow much more revolutionary use instances.

NVIDIA Accelerated Computing on CUDA GPUs Is Sustainable Computing

NVIDIA estimates that if all AI, HPC and knowledge analytics workloads which might be nonetheless operating on CPU servers had been CUDA GPU-accelerated, knowledge facilities would save 40 terawatt-hours of power yearly. That’s the equal power consumption of 5 million U.S. houses per 12 months.

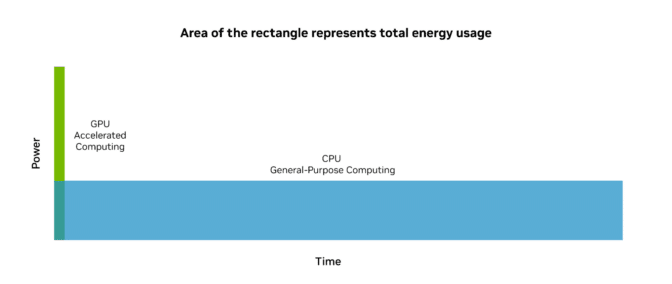

Accelerated computing makes use of the parallel processing capabilities of CUDA GPUs to finish jobs orders of magnitude quicker than CPUs, bettering productiveness whereas dramatically lowering value and power consumption.

Though including GPUs to a CPU-only server will increase peak energy, GPU acceleration finishes duties rapidly after which enters a low-power state. The entire power consumed with GPU-accelerated computing is considerably decrease than with general-purpose CPUs, whereas yielding superior efficiency.

Up to now decade, NVIDIA AI computing has achieved roughly 100,000x extra power effectivity when processing giant language fashions. To place that into perspective, if the effectivity of vehicles improved as a lot as NVIDIA has superior the effectivity of AI on its accelerated computing platform, they’d get 500,000 miles per gallon. That’s sufficient to drive to the moon, and again, on lower than a gallon of gasoline.

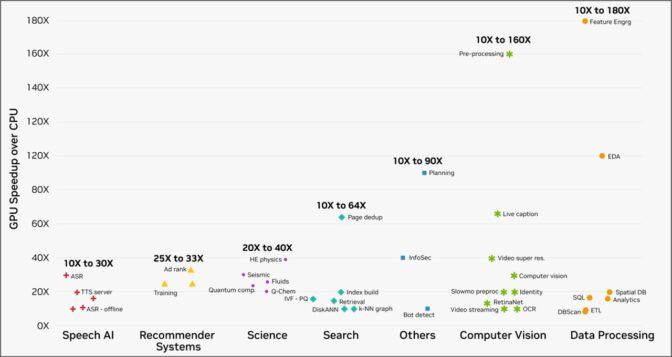

Along with these dramatic boosts in effectivity on AI workloads, GPU computing can obtain unbelievable speedups over CPUs. Clients of the NVIDIA accelerated computing platform operating workloads on cloud service suppliers noticed speedups of 10-180x throughout a gamut of real-world duties, from knowledge processing to pc imaginative and prescient, because the chart beneath reveals.

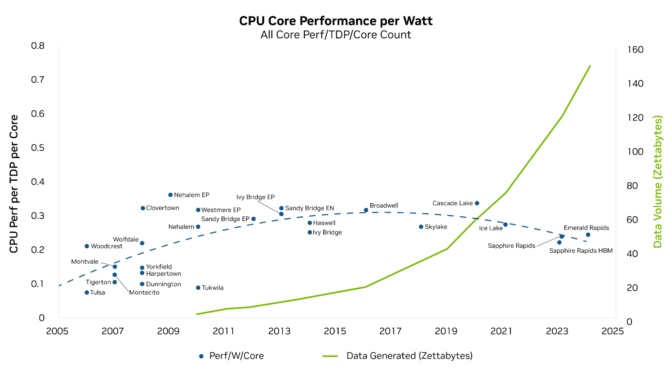

As workloads proceed to demand exponentially extra computing energy, CPUs have struggled to offer the required efficiency, making a rising efficiency hole and driving “compute inflation.” The chart beneath illustrates a multiyear pattern of how knowledge progress has far outpaced the expansion in compute efficiency per watt of CPUs.

The power financial savings of GPU acceleration frees up what would in any other case have been wasted value and power.

With its huge energy-efficiency financial savings, accelerated computing is sustainable computing.

The Proper Instruments for Each Job

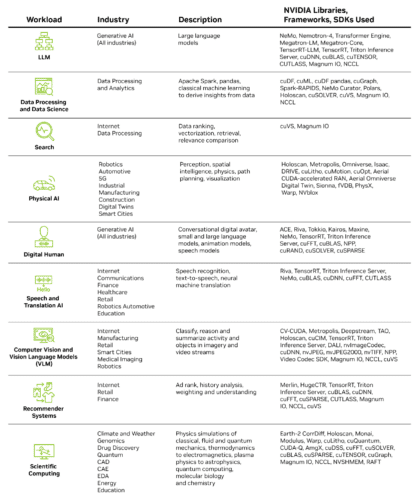

GPUs can’t speed up software program written for general-purpose CPUs. Specialised algorithm software program libraries are wanted to speed up particular workloads. Similar to a mechanic would have a whole toolbox from a screwdriver to a wrench for various duties, NVIDIA offers a various set of libraries to carry out low-level capabilities like parsing and executing calculations on knowledge.

Every NVIDIA CUDA library is optimized to harness {hardware} options particular to NVIDIA GPUs. Mixed, they embody the facility of the NVIDIA platform.

New updates proceed to be added on the CUDA platform roadmap, increasing throughout numerous use instances:

LLM Purposes

NeMo Curator provides builders the pliability to rapidly create customized datasets in giant language mannequin (LLM) use instances. Not too long ago, we introduced capabilities past textual content to broaden to multimodal assist, together with picture curation.

SDG (artificial knowledge era) augments present datasets with high-quality, synthetically generated knowledge to customise and fine-tune fashions and LLM purposes. We introduced Nemotron-4 340B, a brand new suite of fashions particularly constructed for SDG that allows companies and builders to make use of mannequin outputs and construct customized fashions.

Information Processing Purposes

cuVS is an open-source library for GPU-accelerated vector search and clustering that delivers unbelievable pace and effectivity throughout LLMs and semantic search. The newest cuVS permits giant indexes to be inbuilt minutes as an alternative of hours and even days, and searches them at scale.

Polars is an open-source library that makes use of question optimizations and different methods to course of a whole lot of hundreds of thousands of rows of information effectively on a single machine. A brand new Polars GPU engine powered by NVIDIA’s cuDF library will likely be obtainable in open beta. It delivers as much as a 10x efficiency enhance in comparison with CPU, bringing the power financial savings of accelerated computing to knowledge practitioners and their purposes.

Bodily AI

Warp, for high-performance GPU simulation and graphics, helps speed up spatial computing by making it simpler to write down differentiable applications for physics simulation, notion, robotics and geometry processing. The following launch can have assist for a brand new Tile API that enables builders to make use of Tensor Cores inside GPUs for matrix and Fourier computations.

Aerial is a collection of accelerated computing platforms that features Aerial CUDA-Accelerated RAN and Aerial Omniverse Digital Twin for designing, simulating and working wi-fi networks for business purposes and trade analysis. The following launch will embody a brand new growth of Aerial with extra map codecs for ray tracing and simulations with increased accuracy.

Sionna is a GPU-accelerated open-source library for link-level simulations of wi-fi and optical communication methods. With GPUs, Sionna achieves orders-of-magnitude quicker simulation, enabling interactive exploration of those methods and paving the way in which for next-generation bodily layer analysis. The following launch will embody your entire toolchain required to design, practice and consider neural network-based receivers, together with assist for real-time inference of such neural receivers utilizing NVIDIA TensorRT.

NVIDIA offers over 400 libraries. Some, like CV-CUDA, excel at pre- and post-processing of pc imaginative and prescient duties frequent in user-generated video, recommender methods, mapping and video conferencing. Others, like cuDF, speed up knowledge frames and tables central to SQL databases and pandas in knowledge science.

Many of those libraries are versatile — for instance, cuBLAS for linear algebra acceleration — and can be utilized throughout a number of workloads, whereas others are extremely specialised to deal with a particular use case, like cuLitho for silicon computational lithography.

For researchers who don’t wish to construct their very own pipelines with NVIDIA CUDA-X libraries, NVIDIA NIM offers a streamlined path to manufacturing deployment by packaging a number of libraries and AI fashions into optimized containers. The containerized microservices ship improved throughput out of the field.

Augmenting these libraries’ efficiency are an increasing variety of hardware-based acceleration options that ship speedups with the very best power efficiencies. The NVIDIA Blackwell platform, for instance, features a decompression engine that unpacks compressed knowledge information inline as much as 18x quicker than CPUs. This dramatically accelerates knowledge processing purposes that must incessantly entry compressed information in storage like SQL, Apache Spark and pandas, and decompress them for runtime computation.

The combination of NVIDIA’s specialised CUDA GPU-accelerated libraries into cloud computing platforms delivers outstanding pace and power effectivity throughout a variety of workloads. This mix drives important value financial savings for companies and performs an important position in advancing sustainable computing, serving to billions of customers counting on cloud-based workloads to learn from a extra sustainable and cost-effective digital ecosystem.

Be taught extra about NVIDIA’s sustainable computing efforts and take a look at the Power Effectivity Calculator to find potential power and emissions financial savings.

See discover concerning software program product data.