Countries around the world are pursuing sovereign AI to produce artificial intelligence using their own computing infrastructure, data, workforce and business networks, so that AI systems align with local values, laws and interests.

In support of these efforts, NVIDIA today announced the availability of four new NVIDIA NIM microservices that enable developers to more easily build and deploy high-performing generative AI applications.

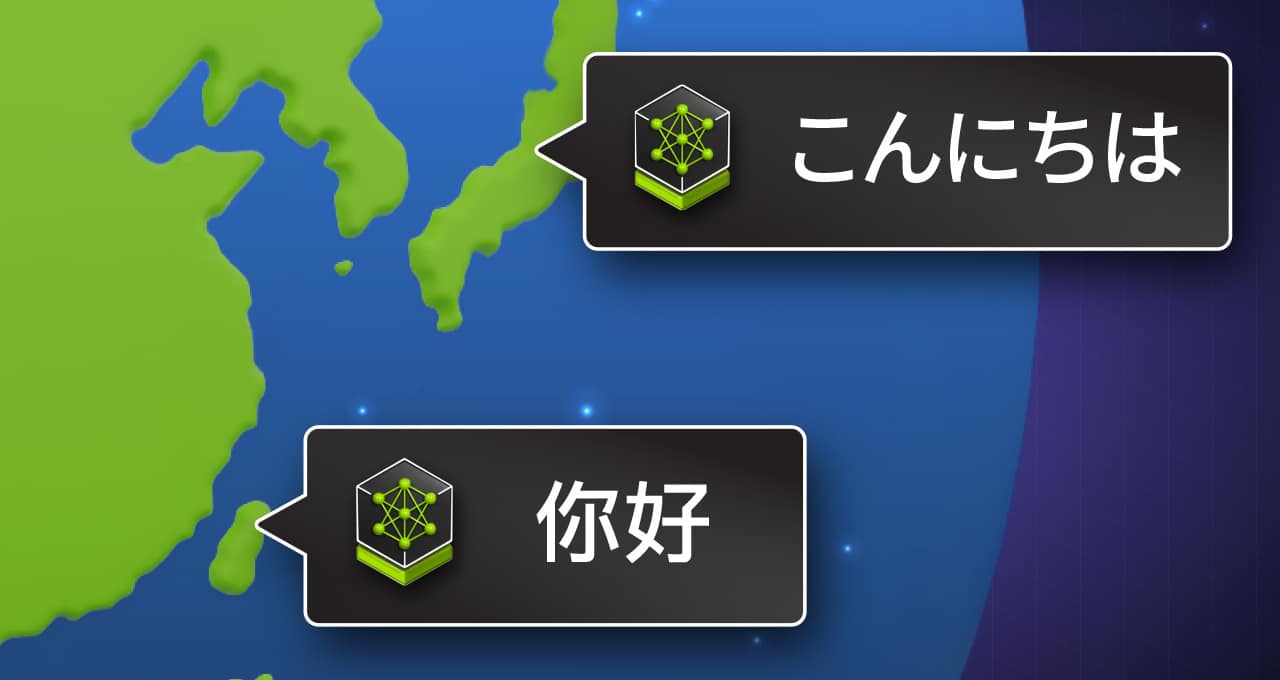

The microservices support popular community models tailored to meet regional needs. They enhance user interactions through accurate understanding and improved responses based on local languages and cultural heritage.

In the Asia-Pacific region alone, generative AI software revenue is expected to reach $48 billion by 2030, up from $5 billion this year, according to ABI Research.

Llama-3-Swallow-70B, trained on Japanese data, and Llama-3-Taiwan-70B, trained on Mandarin data, are regional language models that provide a deeper understanding of local laws, regulations and other customs.

The RakutenAI 7B family of models, built on Mistral-7B, was trained on English and Japanese datasets and is available as two different NIM microservices, one for Chat and one for Instruct. Rakuten’s foundation and instruct models have achieved leading scores among open Japanese large language models, landing the top average score in the LM Evaluation Harness benchmark carried out from January to March 2024.

Training a large language model (LLM) on regional languages makes its outputs more effective: because the model better understands and reflects cultural and linguistic subtleties, its communication is more accurate and nuanced.

Compared with base LLMs like Llama 3, the models offer leading performance for Japanese and Mandarin language understanding, regional legal tasks, question-answering, and language translation and summarization.

Nations worldwide, from Singapore, the United Arab Emirates, South Korea and Sweden to France, Italy and India, are investing in sovereign AI infrastructure.

The new NIM microservices allow businesses, government agencies and universities to host native LLMs in their own environments, enabling developers to build advanced copilots, chatbots and AI assistants.

Developing Applications With Sovereign AI NIM Microservices

Developers can easily deploy the sovereign AI models, packaged as NIM microservices, into production while achieving improved performance.

The microservices, available with NVIDIA AI Enterprise, are optimized for inference with the NVIDIA TensorRT-LLM open-source library.

NIM microservices for Llama 3 70B, which was used as the base model for the new Llama-3-Swallow-70B and Llama-3-Taiwan-70B NIM microservices, can provide up to 5x higher throughput. This lowers the total cost of running the models in production and provides better user experiences by decreasing latency.
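The cost claim follows from simple arithmetic: when the hourly price of the serving hardware is fixed, the cost per generated token is inversely proportional to throughput. The minimal Python sketch below illustrates this; the hourly price and baseline throughput are hypothetical placeholders, not NVIDIA measurements, and the 5x factor is the "up to" figure from the announcement.

```python
def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Return serving cost (USD) per one million tokens at a fixed hourly price."""
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = tokens_per_second * 3600
    # Cost per token = hourly price / tokens served per hour; scaled to 1M tokens.
    return hourly_cost_usd * 1_000_000 / tokens_per_hour


# Hypothetical inputs for illustration only (not benchmark data).
HOURLY_COST_USD = 36.0   # assumed hourly price of the serving hardware
BASELINE_TPS = 1_000.0   # assumed baseline throughput in tokens/second
SPEEDUP = 5.0            # the "up to 5x" upper bound from the announcement

baseline = cost_per_million_tokens(HOURLY_COST_USD, BASELINE_TPS)
optimized = cost_per_million_tokens(HOURLY_COST_USD, BASELINE_TPS * SPEEDUP)
print(f"baseline:  ${baseline:.2f} per 1M tokens")   # $10.00
print(f"optimized: ${optimized:.2f} per 1M tokens")  # $2.00
```

The sketch assumes the same hardware in both cases and a workload that keeps it busy; it does not model latency, which depends on batch size and request patterns.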

The new NIM microservices are available today as hosted application programming interfaces (APIs).
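NIM LLM endpoints are generally described as OpenAI-compatible chat-completions APIs. The Python sketch below builds such a request with only the standard library. The base URL `https://integrate.api.nvidia.com/v1`, the model ID `tokyotech-llm/llama-3-swallow-70b-instruct-v0.1` and the `NVIDIA_API_KEY` environment variable are assumptions to verify against the model's page in the NVIDIA API catalog. `build_chat_request` and `send_chat_request` are hypothetical helper names, not part of any NVIDIA SDK.

```python
import json
import urllib.request

# Assumed values: confirm the endpoint and model ID in the NVIDIA API catalog.
BASE_URL = "https://integrate.api.nvidia.com/v1"
MODEL_ID = "tokyotech-llm/llama-3-swallow-70b-instruct-v0.1"


def build_chat_request(api_key: str, prompt: str, model: str = MODEL_ID,
                       base_url: str = BASE_URL,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions POST request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        # ensure_ascii=False keeps Japanese/Mandarin text readable in the body.
        data=json.dumps(payload, ensure_ascii=False).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Usage (requires network access and a valid key): `send_chat_request(build_chat_request(os.environ["NVIDIA_API_KEY"], "List three Japanese public holidays."))`. In this sketch, pointing `base_url` at a self-hosted deployment (for example, an assumed `http://localhost:8000/v1`) is the only change needed, provided the local service exposes the same route.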

Tapping NVIDIA NIM for Faster, More Accurate Generative AI Results

The NIM microservices accelerate deployments, enhance overall performance and provide the necessary security for organizations across global industries, including healthcare, finance, manufacturing, education and legal.

The Tokyo Institute of Technology fine-tuned Llama-3-Swallow-70B using Japanese-language data.

“LLMs are not mechanical tools that provide the same benefit for everyone. They are rather intellectual tools that interact with human culture and creativity. The influence is mutual where not only are the models affected by the data we train on, but also our culture and the data we generate will be influenced by LLMs,” said Rio Yokota, professor at the Global Scientific Information and Computing Center at the Tokyo Institute of Technology. “Therefore, it is of paramount importance to develop sovereign AI models that adhere to our cultural norms. The availability of Llama-3-Swallow as an NVIDIA NIM microservice will allow developers to easily access and deploy the model for Japanese applications across various industries.”

For example, the Japanese AI company Preferred Networks uses the model to develop Llama3-Preferred-MedSwallow-70B, a healthcare-specific model trained on a unique corpus of Japanese medical data that tops scores on the Japan National Examination for Physicians.

Chang Gung Memorial Hospital (CGMH), one of the leading hospitals in Taiwan, is building a custom-made AI Inference Service (AIIS) to centralize all LLM applications within the hospital system. Using Llama-3-Taiwan-70B, it is improving the efficiency of frontline medical staff with more nuanced medical language that patients can understand.

“By providing instant, context-appropriate guidance, AI applications built with local-language LLMs streamline workflows and serve as a continuous learning tool to support staff development and improve the quality of patient care,” said Dr. Changfu Kuo, director of the Center for Artificial Intelligence in Medicine at CGMH, Linko Branch. “NVIDIA NIM is simplifying the development of these applications, allowing for easy access and deployment of models trained on regional languages with minimal engineering expertise.”

Taiwan-based Pegatron, a maker of electronic devices, will adopt the Llama-3-Taiwan-70B NIM microservice for internal- and external-facing applications. The company has integrated it with its PEGAAi Agentic AI System to automate processes, boosting efficiency in manufacturing and operations.

The Llama-3-Taiwan-70B NIM microservice is also being used by global petrochemical manufacturer Chang Chun Group, world-leading printed circuit board company Unimicron, technology-focused media company TechOrange, online contract service company LegalSign.ai and generative AI startup APMIC. These companies are also collaborating on the open model.

Creating Custom Business Models With NVIDIA AI Foundry

While regional AI models can provide culturally nuanced and localized responses, enterprises still need to fine-tune them for their business processes and domain expertise.

NVIDIA AI Foundry is a platform and service that includes popular foundation models, NVIDIA NeMo for fine-tuning, and dedicated capacity on NVIDIA DGX Cloud, giving developers a full-stack solution for creating a customized foundation model packaged as a NIM microservice.

Additionally, developers using NVIDIA AI Foundry have access to the NVIDIA AI Enterprise software platform, which provides security, stability and support for production deployments.

NVIDIA AI Foundry gives developers the tools to more quickly and easily build and deploy their own custom, regional-language NIM microservices to power AI applications, ensuring culturally and linguistically appropriate results for their users.