Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and showcases new hardware, software, tools and accelerations for RTX PC users.

The era of the AI PC is here, and it’s powered by NVIDIA RTX and GeForce RTX technologies. With it comes a new way to evaluate performance for AI-accelerated tasks, and a new language that can be daunting to decipher when choosing between the desktops and laptops available.

While PC gamers understand frames per second (FPS) and similar stats, measuring AI performance requires new metrics.

Coming Out on TOPS

The first baseline is TOPS, or trillions of operations per second. Trillions is the important word here: the processing numbers behind generative AI tasks are absolutely massive. Think of TOPS as a raw performance metric, similar to an engine’s horsepower rating. More is better.

Compare, for example, the recently announced Copilot+ PC lineup by Microsoft, which includes neural processing units (NPUs) able to perform upwards of 40 TOPS. Performing 40 TOPS is sufficient for some light AI-assisted tasks, like asking a local chatbot where yesterday’s notes are.

But many generative AI tasks are more demanding. NVIDIA RTX and GeForce RTX GPUs deliver unprecedented performance across all generative tasks. The GeForce RTX 4090 GPU offers more than 1,300 TOPS. This is the kind of horsepower needed to handle AI-assisted digital content creation, AI super resolution in PC gaming, generating images from text or video, querying local large language models (LLMs) and more.
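To put those TOPS figures in perspective, here is a minimal arithmetic sketch. It assumes a made-up workload size (`WORKLOAD_OPS` is hypothetical, not a measured figure) and treats TOPS as a peak rate, so the results are best-case lower bounds rather than real-world timings.

```python
def ideal_seconds(total_ops: float, tops: float) -> float:
    """Best-case time to run `total_ops` operations at a peak rate of `tops` TOPS."""
    if tops <= 0:
        raise ValueError("tops must be positive")
    return total_ops / (tops * 1e12)  # 1 TOPS = 1e12 operations per second


# Hypothetical workload size, chosen only to make the arithmetic easy to follow.
WORKLOAD_OPS = 2.6e15

if __name__ == "__main__":
    for name, tops in [("40 TOPS NPU", 40), ("1,300 TOPS GPU", 1300)]:
        print(f"{name}: {ideal_seconds(WORKLOAD_OPS, tops):.1f} s (ideal)")
```

Real applications rarely reach peak TOPS, since memory bandwidth, numeric precision and software all affect the achieved rate, which is why the metrics that follow matter too.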

Insert Tokens to Play

TOPS is only the beginning of the story. LLM performance is measured in the number of tokens generated by the model.

Tokens are the output of the LLM. A token can be a word in a sentence, or even a smaller fragment like punctuation or whitespace. Performance for AI-accelerated tasks can be measured in “tokens per second.”
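As a rough illustration of the metric, the sketch below times a token generator and divides the token count by the elapsed time. The `fake_generate` function is a hypothetical stand-in for a real model that simply emits one "token" per word; an actual LLM runtime would supply its own streaming call and tokenizer.

```python
import time
from typing import Callable, Iterable, Iterator


def throughput(num_tokens: int, seconds: float) -> float:
    """Tokens per second for a run that produced `num_tokens` in `seconds`."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return num_tokens / seconds


def measure(generate: Callable[[str], Iterable[str]], prompt: str) -> float:
    """Time one generation call end to end and return tokens per second."""
    start = time.perf_counter()
    num_tokens = sum(1 for _ in generate(prompt))
    return throughput(num_tokens, time.perf_counter() - start)


def fake_generate(prompt: str) -> Iterator[str]:
    # Hypothetical stand-in for an LLM: one "token" per word, with a small delay.
    for word in prompt.split():
        time.sleep(0.005)
        yield word


if __name__ == "__main__":
    rate = measure(fake_generate, "where are the notes from yesterday")
    print(f"{rate:.1f} tokens/s")
```

Because the timer wraps the whole call, this figure includes any startup cost before the first token; benchmarks often report time to first token separately.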

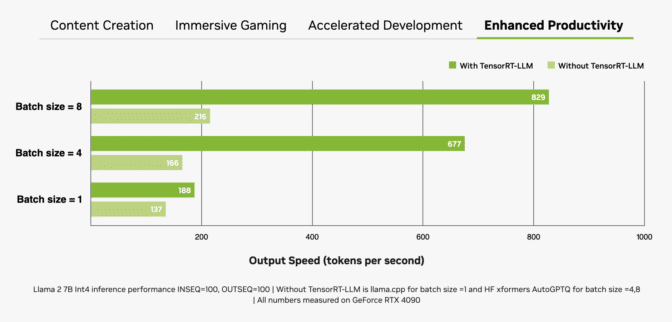

Another important factor is batch size, or the number of inputs processed simultaneously in a single inference pass. As an LLM will sit at the core of many modern AI systems, the ability to handle multiple inputs (e.g. from a single application or across multiple applications) will be a key differentiator. While larger batch sizes improve performance for concurrent inputs, they also require more memory, especially when combined with larger models.
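One reason larger batches need more memory is the attention key/value cache of a transformer LLM, which grows linearly with batch size. The sketch below estimates that cache under stated assumptions: the model shape (32 layers, 32 key/value heads, head dimension 128, 16-bit values) is illustrative of a model in the 7-billion-parameter class, not a figure from this post.

```python
def kv_cache_bytes(batch: int, seq_len: int, layers: int,
                   kv_heads: int, head_dim: int, bytes_per_value: int = 2) -> int:
    """Approximate key/value cache size for a transformer LLM.

    The leading factor of 2 covers one key tensor and one value tensor per layer.
    """
    return 2 * batch * seq_len * layers * kv_heads * head_dim * bytes_per_value


if __name__ == "__main__":
    # Illustrative model shape (an assumption, not taken from the article).
    shape = dict(seq_len=4096, layers=32, kv_heads=32, head_dim=128)
    for batch in (1, 4, 8):
        gib = kv_cache_bytes(batch, **shape) / 2**30
        print(f"batch {batch}: ~{gib:.0f} GiB of KV cache")
```

With these assumed numbers, a batch of 8 full-length sequences needs about 16 GiB for the cache alone, on top of the model weights.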

RTX GPUs are exceptionally well-suited for LLMs due to their large amounts of dedicated video random access memory (VRAM), Tensor Cores and TensorRT-LLM software.

GeForce RTX GPUs offer up to 24GB of high-speed VRAM, and NVIDIA RTX GPUs up to 48GB, which can handle larger models and enable higher batch sizes. RTX GPUs also take advantage of Tensor Cores, dedicated AI accelerators that dramatically speed up the computationally intensive operations required for deep learning and generative AI models. That maximum performance is easily accessed when an application uses the NVIDIA TensorRT software development kit (SDK), which unlocks the highest-performance generative AI on the more than 100 million Windows PCs and workstations powered by RTX GPUs.
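A back-of-the-envelope way to see why VRAM capacity limits model size: the weights alone take roughly the parameter count times the bytes per parameter. The sketch below applies that rule to the 24GB and 48GB capacities mentioned above. The model sizes, bit widths and the `reserve_gb` headroom are illustrative assumptions, and real memory use also includes activations and caches.

```python
def weights_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate size of the model weights in GB (decimal)."""
    return params_billion * bits_per_param / 8


def fits_in_vram(params_billion: float, bits_per_param: int,
                 vram_gb: float, reserve_gb: float = 2.0) -> bool:
    """True if the weights fit while leaving `reserve_gb` free.

    `reserve_gb` is a hypothetical headroom figure for caches and activations.
    """
    return weights_gb(params_billion, bits_per_param) + reserve_gb <= vram_gb


if __name__ == "__main__":
    # Illustrative combinations of model size (billions of parameters) and precision.
    for params, bits in [(7, 16), (13, 16), (70, 4)]:
        size = weights_gb(params, bits)
        for vram in (24, 48):
            verdict = "fits" if fits_in_vram(params, bits, vram) else "does not fit"
            print(f"{params}B @ {bits}-bit (~{size:.1f} GB) {verdict} in {vram} GB")
```

Under these assumptions, lowering the precision of the weights is what lets a much larger model fit in the same amount of VRAM.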

The combination of memory, dedicated AI accelerators and optimized software gives RTX GPUs massive throughput gains, especially as batch sizes increase.

Text-to-Image, Faster Than Ever

Measuring image generation speed is another way to evaluate performance. One of the most straightforward ways uses Stable Diffusion, a popular image-based AI model that allows users to easily convert text descriptions into complex visual representations.

With Stable Diffusion, users can quickly create and refine images from text prompts to achieve their desired output. When using an RTX GPU, these results can be generated faster than processing the AI model on a CPU or NPU.

That performance is even higher when using the TensorRT extension for the popular Automatic1111 interface. RTX users can generate images from prompts up to 2x faster with the SDXL Base checkpoint, significantly streamlining Stable Diffusion workflows.

ComfyUI, another popular Stable Diffusion user interface, added TensorRT acceleration last week. RTX users can now generate images from prompts up to 60% faster, and can even convert these images to videos using Stable Video Diffusion up to 70% faster with TensorRT.

TensorRT acceleration can be put to the test in the new UL Procyon AI Image Generation benchmark, which delivers speedups of 50% on a GeForce RTX 4080 SUPER GPU compared with the fastest non-TensorRT implementation.

TensorRT acceleration will soon be released for Stable Diffusion 3, Stability AI’s new, highly anticipated text-to-image model, boosting performance by 50%. Plus, the new TensorRT-Model Optimizer enables accelerating performance even further. This results in a 70% speedup compared with the non-TensorRT implementation, along with a 50% reduction in memory consumption.
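To translate percentages like these into time per image, the sketch below assumes that "X% faster" means X% higher throughput, so the time per image is divided by (1 + X/100). That reading is an assumption, and the 15-second and 12 GB baselines are hypothetical numbers chosen only for the arithmetic.

```python
def time_after_speedup(baseline_seconds: float, speedup_percent: float) -> float:
    """Time per item after a throughput gain of `speedup_percent`."""
    return baseline_seconds / (1 + speedup_percent / 100)


def memory_after_reduction(baseline_gb: float, reduction_percent: float) -> float:
    """Memory use after cutting consumption by `reduction_percent`."""
    return baseline_gb * (1 - reduction_percent / 100)


if __name__ == "__main__":
    baseline_s, baseline_gb = 15.0, 12.0  # hypothetical baseline figures
    for pct in (50, 70, 100):  # a 100% gain corresponds to "2x faster"
        print(f"{pct}% faster: {time_after_speedup(baseline_s, pct):.1f} s per image")
    print(f"50% less memory: {memory_after_reduction(baseline_gb, 50):.1f} GB")
```

Under this reading, a 50% speedup cuts the time per image by a third rather than by half, which is worth keeping in mind when comparing benchmark claims.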

Of course, seeing is believing: the true test is in the real-world use case of iterating on an original prompt. Users can refine image generation by tweaking prompts significantly faster on RTX GPUs, taking seconds per iteration compared with minutes on a MacBook Pro M3 Max. Plus, users get both speed and security with everything remaining private when running locally on an RTX-powered PC or workstation.

The Results Are in and Open Sourced

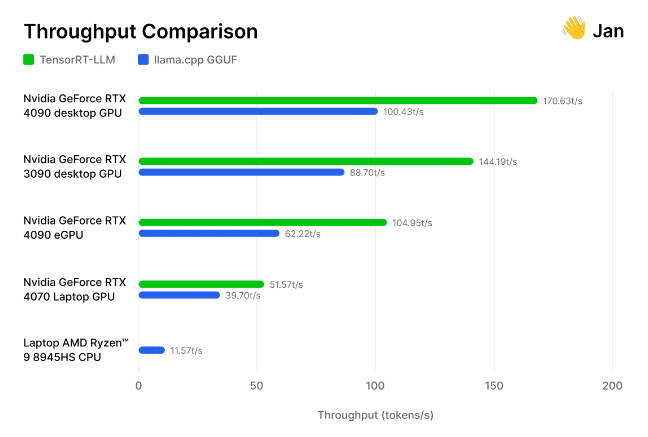

But don’t just take our word for it. The team of AI researchers and engineers behind the open-source Jan.ai recently integrated TensorRT-LLM into its local chatbot app, then tested these optimizations for themselves.

The researchers tested their implementation of TensorRT-LLM against the open-source llama.cpp inference engine across a variety of GPUs and CPUs used by the community. They found that TensorRT-LLM is “30-70% faster than llama.cpp on the same hardware,” as well as more efficient on consecutive processing runs. The team also included its methodology, inviting others to measure generative AI performance for themselves.

From games to generative AI, speed wins. TOPS, images per second, tokens per second and batch size are all considerations when determining performance champs.

Generative AI is transforming gaming, videoconferencing and interactive experiences of all kinds. Make sense of what’s new and what’s next by subscribing to the AI Decoded newsletter.