It’s official: NVIDIA delivered the world’s fastest platform in industry-standard tests for inference on generative AI.

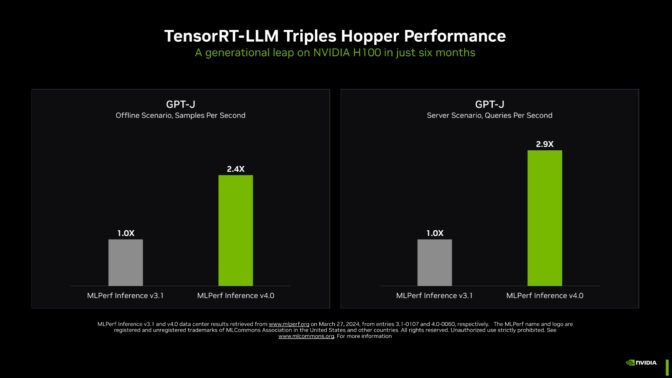

In the latest MLPerf benchmarks, NVIDIA TensorRT-LLM, software that speeds and simplifies the complex job of inference on large language models, boosted the performance of NVIDIA Hopper architecture GPUs on the GPT-J LLM nearly 3x over their results just six months ago.

The dramatic speedup demonstrates the power of NVIDIA’s full-stack platform of chips, systems and software to handle the demanding requirements of running generative AI.

Leading companies are using TensorRT-LLM to optimize their models. And NVIDIA NIM, a set of inference microservices that includes inferencing engines like TensorRT-LLM, makes it easier than ever for businesses to deploy NVIDIA’s inference platform.

Raising the Bar in Generative AI

TensorRT-LLM running on NVIDIA H200 Tensor Core GPUs, the latest memory-enhanced Hopper GPUs, delivered the fastest inference performance in MLPerf’s biggest test of generative AI to date.

The new benchmark uses the largest version of Llama 2, a state-of-the-art large language model packing 70 billion parameters. The model is more than 10x larger than the GPT-J LLM first used in the September benchmarks.

The memory-enhanced H200 GPUs, in their MLPerf debut, used TensorRT-LLM to produce up to 31,000 tokens/second, a record on MLPerf’s Llama 2 benchmark.

The H200 GPU results include up to 14% gains from a custom thermal solution. It’s one example of innovations beyond standard air cooling that systems builders are applying to their NVIDIA MGX designs to take the performance of Hopper GPUs to new heights.

Memory Boost for NVIDIA Hopper GPUs

NVIDIA is sampling H200 GPUs to customers today and shipping in the second quarter. They’ll be available soon from nearly 20 leading system builders and cloud service providers.

H200 GPUs pack 141GB of HBM3e running at 4.8TB/s. That’s 76% more memory and 43% more memory bandwidth than H100 GPUs. These accelerators plug into the same boards and systems and use the same software as H100 GPUs.
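
As a quick check on those percentages, the comparison can be reproduced with a few lines of arithmetic. This is a minimal sketch: the H200 figures come from the text, while the H100 baseline of 80GB at 3.35TB/s is an assumption (the SXM variant's published specification) that this article does not state.

```python
# H200 figures quoted in the article.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBS = 4.8

# ASSUMPTION: H100 SXM baseline; these numbers are not given in the article.
H100_MEMORY_GB = 80
H100_BANDWIDTH_TBS = 3.35


def percent_gain(new: float, old: float) -> int:
    """Return the relative increase of `new` over `old`, rounded to a whole percent."""
    return round((new / old - 1) * 100)


memory_gain = percent_gain(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_gain = percent_gain(H200_BANDWIDTH_TBS, H100_BANDWIDTH_TBS)

print(f"Memory: +{memory_gain}%, bandwidth: +{bandwidth_gain}%")
```

With that assumed baseline, the script prints gains of 76% and 43%, matching the figures quoted above.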

With HBM3e memory, a single H200 GPU can run an entire Llama 2 70B model with the highest throughput, simplifying and speeding inference.
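
A rough back-of-the-envelope estimate suggests why the larger memory matters for a 70-billion-parameter model. The sketch below counts model weights only and ignores the KV cache, activations and runtime overhead; the bytes-per-weight values are assumptions for illustration, since the article does not say which numeric precision the submission used.

```python
PARAMETERS = 70e9        # Llama 2 70B, from the article
H200_MEMORY_GB = 141     # H200 capacity, from the article

# ASSUMPTION: illustrative storage widths per weight, not taken from the article.
BYTES_PER_WEIGHT = {"fp16": 2, "fp8": 1}


def weight_footprint_gb(parameters: float, bytes_per_weight: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return parameters * bytes_per_weight / 1e9


footprints_gb = {
    name: weight_footprint_gb(PARAMETERS, width)
    for name, width in BYTES_PER_WEIGHT.items()
}

for name, size_gb in footprints_gb.items():
    headroom_gb = H200_MEMORY_GB - size_gb
    print(f"{name}: weights {size_gb:.0f} GB, headroom {headroom_gb:.0f} GB")
```

Under these assumptions, 16-bit weights alone would nearly fill the GPU, while 8-bit weights would leave roughly half the memory free for everything else inference needs.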

GH200 Packs Even More Memory

Even more memory (up to 624GB of fast memory, including 144GB of HBM3e) is packed in NVIDIA GH200 Superchips, which combine on one module a Hopper architecture GPU and a power-efficient NVIDIA Grace CPU. NVIDIA accelerators are the first to use HBM3e memory technology.

With nearly 5 TB/second of memory bandwidth, GH200 Superchips delivered standout performance, including on memory-intensive MLPerf tests such as recommender systems.

Sweeping Every MLPerf Test

On a per-accelerator basis, Hopper GPUs swept every test of AI inference in the latest round of the MLPerf industry benchmarks.

In addition, NVIDIA Jetson Orin remains at the forefront in MLPerf’s edge category. In the last two inference rounds, Orin ran the most diverse set of models in the category, including GPT-J and Stable Diffusion XL.

The MLPerf benchmarks cover today’s most popular AI workloads and scenarios, including generative AI, recommendation systems, natural language processing, speech and computer vision. NVIDIA was the only company to submit results on every workload in the latest round and in every round since MLPerf’s data center inference benchmarks began in October 2020.

Continued performance gains translate into lower costs for inference, a large and growing part of the daily work for the millions of NVIDIA GPUs deployed worldwide.

Advancing What’s Possible

Pushing the boundaries of what’s possible, NVIDIA demonstrated three innovative techniques in a special section of the benchmarks called the open division, created for testing advanced AI methods.

NVIDIA engineers used a technique called structured sparsity, a way of reducing calculations first introduced with NVIDIA A100 Tensor Core GPUs, to deliver up to 33% speedups on inference with Llama 2.
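
To make the idea concrete, here is a minimal sketch of one structured sparsity pattern. It assumes the 2:4 fine-grained pattern that NVIDIA documents for A100 sparse Tensor Cores, in which two of every four consecutive weights are zero, and it assumes a simple magnitude-based rule for choosing which weights to drop. The article does not describe the pattern or selection method used in the MLPerf submission, and the function name `prune_2_of_4` is hypothetical.

```python
def prune_2_of_4(weights: list[float]) -> list[float]:
    """Zero the two smallest-magnitude values in each consecutive group of four.

    ASSUMPTION: 2:4 pattern with magnitude-based selection, for illustration only.
    """
    if len(weights) % 4 != 0:
        raise ValueError("number of weights must be a multiple of 4")

    pruned: list[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Positions of the two largest-magnitude weights in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


row = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
sparse_row = prune_2_of_4(row)
print(sparse_row)
```

For the sample row, this prints `[0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]`. Because exactly half the values in every group are zero, hardware that understands the pattern can skip those multiplications, which is where the reduction in calculations comes from.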

A second open division test found inference speedups of up to 40% using pruning, a way of simplifying an AI model (in this case, an LLM) to increase inference throughput.

Finally, an optimization called DeepCache reduced the math required for inference with the Stable Diffusion XL model, accelerating performance by a whopping 74%.

All these results were run on NVIDIA H100 Tensor Core GPUs.

A Trusted Source for Users

MLPerf’s tests are transparent and objective, so users can rely on the results to make informed buying decisions.

NVIDIA’s partners participate in MLPerf because they know it’s a valuable tool for customers evaluating AI systems and services. Partners submitting results on the NVIDIA AI platform in this round included ASUS, Cisco, Dell Technologies, Fujitsu, GIGABYTE, Google, Hewlett Packard Enterprise, Lenovo, Microsoft Azure, Oracle, QCT, Supermicro, VMware (recently acquired by Broadcom) and Wiwynn.

All the software NVIDIA used in the tests is available in the MLPerf repository. These optimizations are continuously folded into containers available on NGC, NVIDIA’s software hub for GPU applications, as well as NVIDIA AI Enterprise, a secure, supported platform that includes NIM inference microservices.

The Next Big Thing

The use cases, model sizes and datasets for generative AI continue to expand. That’s why MLPerf continues to evolve, adding real-world tests with popular models like Llama 2 70B and Stable Diffusion XL.

Keeping pace with the explosion in LLM model sizes, NVIDIA founder and CEO Jensen Huang announced last week at GTC that NVIDIA Blackwell architecture GPUs will deliver the new levels of performance required for multitrillion-parameter AI models.

Inference for large language models is difficult, requiring both expertise and the full-stack architecture NVIDIA demonstrated on MLPerf with Hopper architecture GPUs and TensorRT-LLM. There’s much more to come.

Learn more about MLPerf benchmarks and the technical details of this inference round.